Whisper's Word-Level Timestamps Are Out

The wait is over: word-level timestamps have finally arrived!

Hello, fellow tech and language enthusiasts! Today we look at speech-to-text technology, where Whisper, OpenAI's speech recognition model, has recently gained a notable new feature: word-level timestamps.

Let me simplify this for you. Whisper, an automatic speech recognition (ASR) model, has transformed the way our video subtitling and transcription service works. Initially, it offered timestamps only at the segment level, telling us when each audio segment was spoken. Word-level timestamps go a step further and mark a significant shift in what Whisper can do.

We're talking about pinpointing the exact moment at which each individual word is spoken. No more playing guessing games within audio segments. This addition to Whisper opens up a whole new range of use cases.

Imagine the possibilities this presents. It is no longer necessary to tediously scrub through lengthy audio recordings in search of a single piece of information. With word-level timestamps, navigation becomes straightforward. Want to find the quote that sparked your inspiration? Voilà! Whisper has your back.

Some Applications of Word-Level Timestamps:

Videos for All, Accessibility First: Expand your reach and embrace accessibility. Accurate transcriptions make your videos inclusive for viewers who are deaf or hard of hearing and help you comply with accessibility regulations, so you reach a wider audience.

Video SEO: Use word-level timestamps and transcriptions to uncover key phrases and keywords in your videos. This can improve your search engine optimization and raise your video's visibility in search results.

Content Monetization: By utilising detailed transcriptions and timestamps, you can efficiently determine suitable ad placements within your videos and podcasts and connect advertisers with the appropriate keywords and topics. Targeted advertising can help your content generate more revenue. Don't let your content go untapped - seize the opportunity for profitable growth today!
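The applications above all come down to looking up when a given word or phrase was spoken. Here is a minimal Python sketch of that lookup. It assumes each word is a dictionary with `word`, `start`, and `end` keys, which is the shape Whisper produces; the helper names `normalize` and `find_phrase` and the sample timings are made up for illustration.

```python
from typing import Dict, List, Tuple

Word = Dict[str, object]  # assumed shape: {"word": str, "start": float, "end": float}


def normalize(token: str) -> str:
    """Lowercase a token and strip surrounding whitespace and punctuation."""
    return token.strip().strip(".,!?;:\"'").lower()


def find_phrase(words: List[Word], phrase: str) -> List[Tuple[float, float]]:
    """Return (start, end) times for every occurrence of `phrase` in `words`."""
    target = [normalize(t) for t in phrase.split()]
    tokens = [normalize(str(w["word"])) for w in words]
    hits: List[Tuple[float, float]] = []
    if not target:
        return hits
    # Slide a window of len(target) words over the transcript.
    for i in range(len(tokens) - len(target) + 1):
        if tokens[i:i + len(target)] == target:
            hits.append((words[i]["start"], words[i + len(target) - 1]["end"]))
    return hits


# Illustrative data only; real timings come from the ASR output.
words = [
    {"word": " Stay", "start": 0.0, "end": 0.4},
    {"word": " hungry,", "start": 0.4, "end": 0.9},
    {"word": " stay", "start": 1.1, "end": 1.4},
    {"word": " foolish.", "start": 1.4, "end": 2.0},
]

print(find_phrase(words, "stay foolish"))
```

The same lookup could feed a "jump to quote" button, a keyword index for SEO, or a list of candidate ad slots near a given topic.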

Note

On a side note, an alternative to Whisper is WhisperX, developed by Oxford researchers. It is a word-level timestamp solution that improves transcriptions and timestamps and adds features such as speaker diarization and fast batched inference. However, it supports only a select range of languages, including English, Spanish, French, German, Italian, Portuguese, Dutch, Russian, Mandarin Chinese, and Japanese. Language support may change over time, so refer to the latest documentation for updates.

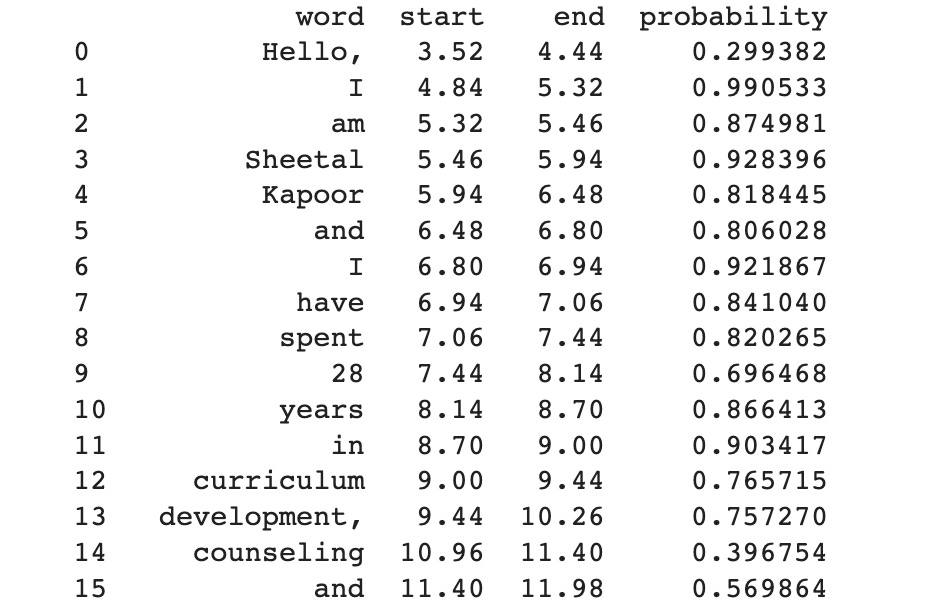

Let’s see what this looks like.

For readers who want to try it, I've developed a Colab notebook that lets you generate word-level timestamps using OpenAI's Whisper.
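If you would rather run it locally, here is a minimal sketch using the open-source `openai-whisper` package. It is not the notebook's exact code. The `word_timestamps=True` option of `transcribe` is part of the package; the helper names, the `"base"` model choice, the file name `audio.mp3`, and the sample result below are assumptions for illustration.

```python
from typing import Any, Dict, List


def transcribe_with_words(audio_path: str, model_name: str = "base") -> Dict[str, Any]:
    """Run Whisper with word-level timestamps enabled."""
    # Imported lazily; needs `pip install openai-whisper` and ffmpeg on the PATH.
    import whisper

    model = whisper.load_model(model_name)
    # The task defaults to "transcribe" (same-language text).
    return model.transcribe(audio_path, word_timestamps=True)


def flatten_words(result: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Collect the per-word entries from every segment into one list."""
    return [w for seg in result.get("segments", []) for w in seg.get("words", [])]


def format_words(words: List[Dict[str, Any]]) -> List[str]:
    """Render each word as '[start -> end] word'."""
    return [f"[{w['start']:.2f} -> {w['end']:.2f}] {w['word'].strip()}" for w in words]


# Real usage (hypothetical file name):
#   result = transcribe_with_words("audio.mp3")
# Below is a hand-written result with the same shape, so the helpers
# can be tried without downloading a model. The timings are made up.
sample_result = {
    "text": " Hello world. Bye.",
    "segments": [
        {"start": 0.0, "end": 0.9, "words": [
            {"word": " Hello", "start": 0.0, "end": 0.32},
            {"word": " world.", "start": 0.32, "end": 0.9},
        ]},
        {"start": 1.2, "end": 1.6, "words": [
            {"word": " Bye.", "start": 1.2, "end": 1.6},
        ]},
    ],
}

for line in format_words(flatten_words(sample_result)):
    print(line)
```

Each segment in the result carries its own `words` list, so flattening them gives one timeline for the whole recording.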

Suggestion

While Whisper does offer word-level timestamps for both translation and transcription, it is advisable to choose transcription instead of translation for accurate alignment. This is because the translation of a phrase or sentence from language XX to English may or may not match the exact timestamps of the transcribed version.

For your information, translation typically refers to converting speech audio in any language XX into English text, while transcription refers to converting speech audio in language XX into text in the same language XX.

For instance, consider this Hindi audio generated with Dubverse TTS:

Transcription: "आज का होमवर्क क्या है?"

Translation: "What is today's homework?"

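However, the first word of the translation, "What," does not correspond to the first spoken word, आज, which means "today." Because word order differs between the two languages, the translated words cannot simply take on the timing of the spoken words one by one.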

Consequently, in most XX language to English translation use cases, word-level timestamps may not be reliable.

🎙️ Have you tried the word-level timestamps feature of OpenAI's Whisper ASR system? Please share your thoughts in the comments! No more scrolling through several open-source projects and being disappointed by inadequate word-level timestamps.

🌟 Also, did you know that at Dubverse, we're pioneers in speech-to-text and translation models fueled by the latest LLMs? Stay ahead with our cutting-edge releases and give us a try! Your voice, our technology: unstoppable together!

Join our Discord community to get the scoop on the latest in Audio Generative AI!

Until next time,

Pranav