A State-of-the-Art Survey of Text-to-Speech Technology 2023

Speaking Machines: Foundation Audio Generation Models

The latest wave of innovation in generative AI is in speech models. It is now very difficult to tell AI-generated voices from human ones, and the same is becoming true even for songs! TTS technology has been part of AI for a long time, so what changed?


Self-supervised learning (SSL) has taken machine learning by storm, and every pre-existing technology is trying to use it to reach better quality. TTS systems are no exception.

Self Supervised Learning in Speech

The paradigm of training supervised text-to-speech models changed when Meta published its findings on Spoken Language Modeling, which showed that large SSL-based pre-trained models learn phoneme-like representations when trained on large amounts of speech data. These are highly abstract, phoneme-like units that can be obtained directly from speech, without needing to align the audio with a transcript of what was spoken. Another way to get such units is to train a VQ-VAE; in that case the entries of the quantized codebook act as the phonemes.
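As a rough illustration of how a quantized codebook turns continuous speech features into discrete tokens, here is a minimal sketch in Python. The 2-D codebook and the frames are made-up toy values (an assumption for illustration); a real VQ-VAE learns its codebook and works on high-dimensional features.

```python
import math

def quantize(frames, codebook):
    """Map each feature frame to the index of its nearest codebook vector."""
    tokens = []
    for frame in frames:
        distances = [math.dist(frame, code) for code in codebook]
        tokens.append(distances.index(min(distances)))
    return tokens

# Toy codebook with 3 entries (hypothetical values, not from a trained model).
codebook = [(0.0, 0.0), (1.0, 1.0), (-1.0, 1.0)]

# Four toy feature frames, e.g. one per short window of audio.
frames = [(0.1, -0.1), (0.9, 1.2), (-0.8, 0.7), (0.2, 0.1)]

semantic_tokens = quantize(frames, codebook)
print(semantic_tokens)  # [0, 1, 2, 0]
```

Each index is a token from a fixed vocabulary, which is what lets a language model be trained on audio in the first place.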

These phoneme-like units are also called semantic tokens. They unlock the potential of huge amounts of unlabelled data, which was previously out of reach because of the dependency on labelled data and manually created phonemes.

The TTS system is divided into 3 Parts:

Text to Semantic Tokens Model (M1):

To map text to semantic tokens, a GPT-like autoregressive model or an encoder-decoder transformer can be used. This task can be viewed as translating textual information into semantic information. Semantic tokens are speaker dependent, because different speakers say the same content with different prosody and duration, which is why a speaker encoder is attached to this module. The M1 model is also responsible for controlling the length of the audio.
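To make the autoregressive part and the length control concrete, here is a minimal sketch in Python. The `next_token_distribution` function is a hypothetical stand-in for the transformer, and the stop-token convention is an assumption for illustration: generation ends when the stop token is sampled, which is one way such a model ends up deciding how long the audio is.

```python
import random

STOP = 0  # hypothetical id of the end-of-sequence token

def next_token_distribution(prefix, vocab_size):
    """Stand-in for the transformer: the stop probability grows with prefix length."""
    p_stop = min(1.0, len(prefix) / 20.0)
    p_other = (1.0 - p_stop) / (vocab_size - 1)
    return [p_stop] + [p_other] * (vocab_size - 1)

def generate(text_tokens, vocab_size=16, max_len=50, seed=0):
    """Sample semantic tokens one at a time, each conditioned on everything so far."""
    rng = random.Random(seed)
    output = []
    while len(output) < max_len:
        probs = next_token_distribution(text_tokens + output, vocab_size)
        token = rng.choices(range(vocab_size), weights=probs)[0]
        if token == STOP:  # the model itself decides when the utterance ends
            break
        output.append(token)
    return output

semantic_tokens = generate([1, 2, 3])
print(len(semantic_tokens), semantic_tokens)
```

In a real M1 the distribution comes from the network's logits, and the speaker embedding would be part of the conditioning prefix.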

Semantic Tokens to Mel-Spectrogram/Acoustic Tokens Model (M2):

Once we have the semantic tokens, obtained via SSL from audio files during training (or from M1 in case of inference), we can use them to generate a mel-spectrogram or acoustic tokens (refer to Encodec).

Mel-Spectrogram/Acoustic Tokens to Audio wav (Vocoder):

The output of the M2 model is an intermediate representation, which needs to be converted back to an audio waveform.

The model used here depends upon the middle representation used in M2.
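Put together, the three parts compose like a simple function pipeline. The sketch below only shows the data flow; `toy_m1`, `toy_m2` and `toy_vocoder` are hypothetical stand-ins (real stages are neural networks), and the shapes are toy assumptions.

```python
def synthesize(text, m1, m2, vocoder):
    """Run the three-stage TTS pipeline: text -> semantic -> acoustic -> waveform."""
    semantic_tokens = m1(text)             # M1: text to semantic tokens
    acoustic_frames = m2(semantic_tokens)  # M2: semantic tokens to mel frames or codec codes
    return vocoder(acoustic_frames)        # vocoder: intermediate representation to samples

# Toy stand-ins so the sketch runs end to end.
def toy_m1(text):
    return [ord(ch) % 8 for ch in text]              # one token per character

def toy_m2(tokens):
    return [[t / 8.0] * 4 for t in tokens]           # 4 "bins" per frame

def toy_vocoder(frames):
    return [value for frame in frames for value in frame]  # flatten frames to samples

waveform = synthesize("hi", toy_m1, toy_m2, toy_vocoder)
print(len(waveform))  # 8
```

The useful property of this split is that each stage can be swapped independently, as long as the neighbouring stages agree on the representation passed between them.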

The two most used representations are:

1. Mel-spectrogram: an 80-bin mel filter bank applied to the STFT, which represents audio in the time-frequency domain.

2. Neural audio codec: a deep learning model trained to represent audio in continuous and discrete representations.
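For the mel-spectrogram, it helps to see where the 80 bins sit on the frequency axis. The sketch below uses the HTK-style mel formula, which is one common convention (libraries differ), and assumes a 0 to 8000 Hz range; both choices are assumptions for illustration, not settings taken from any of the models discussed here.

```python
import math

def hz_to_mel(f):
    """HTK-style conversion from Hz to mel."""
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_center_frequencies(n_mels=80, f_min=0.0, f_max=8000.0):
    """Center frequencies (Hz) of n_mels filters spaced evenly on the mel scale."""
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    # n_mels triangular filters need n_mels + 2 evenly spaced mel points;
    # the inner n_mels points are the filter centers.
    step = (m_max - m_min) / (n_mels + 1)
    return [mel_to_hz(m_min + step * (i + 1)) for i in range(n_mels)]

centers = mel_center_frequencies()
print(round(centers[0], 1), round(centers[-1], 1))
```

The bins are packed densely at low frequencies and spread out at high frequencies, which roughly mirrors how human hearing resolves pitch.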

Let’s discuss these pipelines with SOTA models in the Open Source Community.

TORTOISE TTS

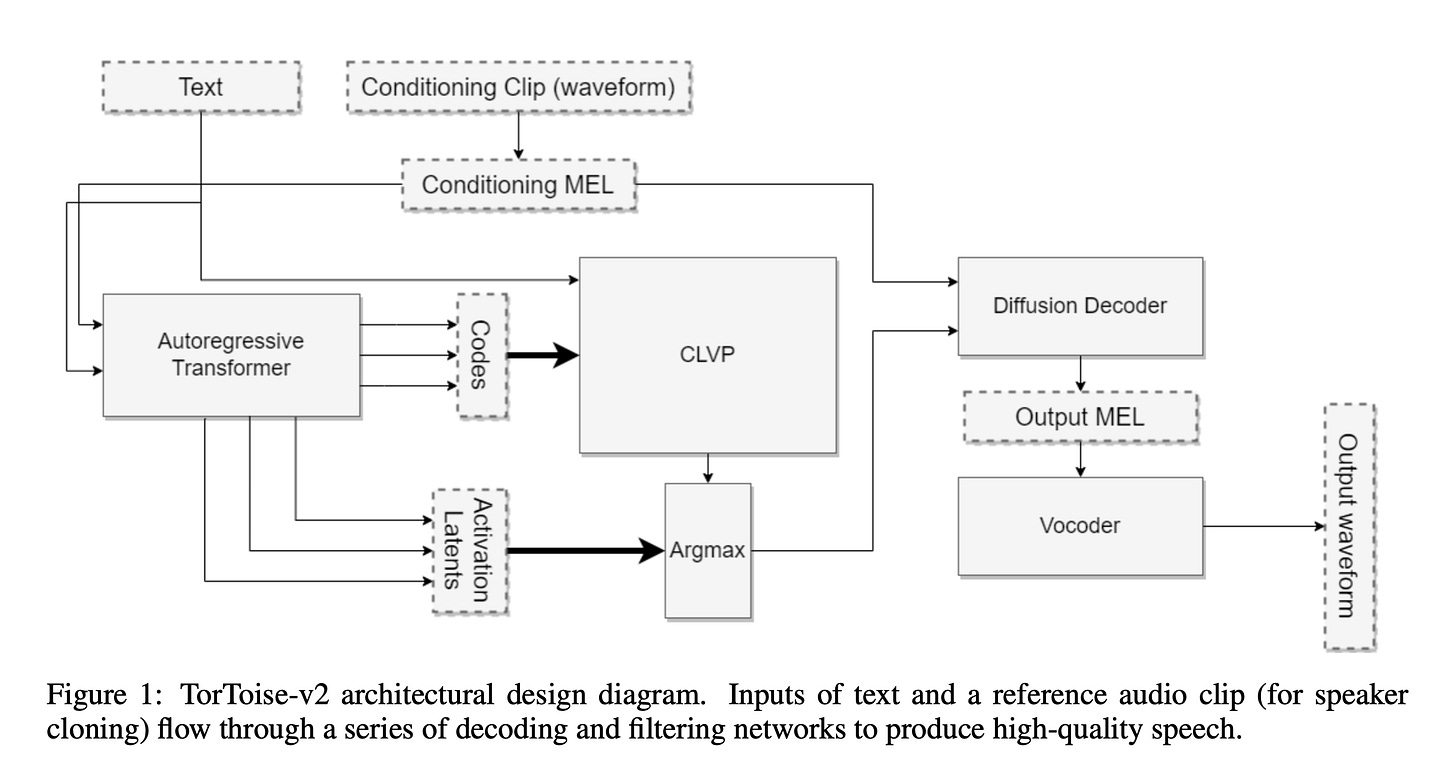

Tortoise TTS takes inspiration from DALL-E (which also works in a similar M1 and M2 fashion) and improves on it by using a diffusion model in place of M2. The diffusion model consists of attention layers and CNN layers, and is not a U-Net like in other diffusion papers. M2 works on semantic tokens to generate the mel spectrogram. The produced mel spectrogram is converted to a waveform using the UnivNet vocoder.

The semantic tokens are first extracted by training a VQ-VAE model on the training dataset. This step produces a codebook of 8192 phoneme-like tokens in an unsupervised manner.

The text-to-semantic-tokens model M1 is a GPT-2-like decoder-only model.

Lastly, after training the M1 and M2 models, the M2 model is fine-tuned on the M1 latents to increase the overall quality of the produced audio.

Tortoise also has a CLVP (Contrastive Language-Voice Pretrained Transformer) model, which is used to re-rank the outputs of M1 to give more expressive outputs. It takes semantic tokens and text and produces a score.

Hence, inference with the model has the following steps:

1. Generate a large number of semantic token sequences from M1 using different sampling techniques.

2. Re-rank the generated samples using the CLVP model.

3. Select the top k speech candidates.

4. Decode the mel spectrogram using M2.

5. Convert to a waveform using the UnivNet vocoder.
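The sample, re-rank and select steps can be sketched as follows. Everything here is a toy stand-in: `sample_candidates` draws random tokens instead of running M1, and `clvp_score` is a made-up scoring rule rather than the real CLVP model, which compares learned text and speech embeddings. Only the control flow is meant to carry over.

```python
import random

def sample_candidates(text, n, rng):
    """Stand-in for M1: draw n candidate token sequences (random here)."""
    return [[rng.randrange(8192) for _ in range(len(text))] for _ in range(n)]

def clvp_score(text, tokens):
    """Stand-in for CLVP: a hypothetical score where higher means a better match."""
    return -abs(sum(tokens) / len(tokens) - 4096)

def rerank(text, candidates, k):
    """Sort candidates by score, best first, and keep the top k."""
    ranked = sorted(candidates, key=lambda c: clvp_score(text, c), reverse=True)
    return ranked[:k]

rng = random.Random(0)
text = "hello"
candidates = sample_candidates(text, n=16, rng=rng)
best = rerank(text, candidates, k=2)

# Only the k survivors would go on to the expensive M2 and vocoder stages.
print(len(candidates), len(best))  # 16 2
```

The design choice is to spend compute on many cheap M1 samples and reserve the slow diffusion decoding for the few candidates that score best.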

Tortoise is trained on 40k hours of English Apple Podcasts data.

At inference, Tortoise shows language understanding and robust zero-shot capabilities. The outputs are expressive and capture tonality really well, though the training recipe of the model is not public (Something interesting is cooking here… subscribe to know what’s coming).

Inference is very slow for this model, hence the name Tortoise-TTS. Newer approaches take inspiration from Tortoise but use a GAN instead of a diffusion model. The GAN produces the audio waveform directly from semantic tokens, which effectively drops the M2 model. One such example is XTTS: here M1 maps text to semantic tokens, and a HiFi-GAN vocoder is then used with a speaker encoder and the semantic tokens to produce the waveform directly. This is blazing fast.

Combine the above approach with streamable LLMs for the decoder-only M1 model, and we get streamable, high-quality TTS.
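A minimal sketch of what "streamable" means here, using Python generators: tokens are vocoded in small chunks as soon as they arrive, instead of waiting for the full sequence. `stream_tokens` and `toy_vocoder` are hypothetical stand-ins for M1 and the GAN vocoder, and the chunk size of 4 is an arbitrary assumption.

```python
def stream_tokens(text):
    """Stand-in for a streaming M1: yields one semantic token at a time."""
    for ch in text:
        yield ord(ch) % 8192

def toy_vocoder(chunk):
    """Stand-in for the vocoder: one 'sample' per token."""
    return [token / 8192 for token in chunk]

def stream_audio(tokens, chunk_size, vocoder):
    """Vocode tokens chunk by chunk so playback can start before M1 finishes."""
    chunk = []
    for token in tokens:
        chunk.append(token)
        if len(chunk) == chunk_size:
            yield vocoder(chunk)
            chunk = []
    if chunk:  # flush the final, possibly shorter chunk
        yield vocoder(chunk)

audio_chunks = list(stream_audio(stream_tokens("hello world"), 4, toy_vocoder))
print([len(c) for c in audio_chunks])  # [4, 4, 3]
```

This only works when the vocoder needs no future context beyond the chunk. A diffusion M2 that refines the whole utterance over many denoising steps is harder to stream in this way.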

BARK

Bark follows a language modeling approach to audio generation and is inspired by AudioLM. AudioLM uses w2v-BERT to extract its semantic tokens; Bark's semantic vocabulary has 10,000 tokens.

The audio representation used here is a discrete neural audio codec. The audio codec gives 8 different codes per frame (C1, C2, …, C8: one for each quantizer, each drawn from a vocabulary of 1024). This configuration is specific to Bark's training and can differ for other models. The first code captures the most information, and each following code adds finer detail in a hierarchical fashion.
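The hierarchy of codes comes from residual vector quantization: each quantizer encodes whatever error the previous ones left behind. Here is a minimal scalar sketch in Python. The codebooks are hand-built toy values with 4 entries each (an assumption for illustration); a real codec like Encodec learns vector codebooks with 1024 entries.

```python
def rvq_encode(value, codebooks):
    """Quantize stage by stage; each stage encodes the residual of the previous ones."""
    codes, residual = [], value
    for codebook in codebooks:
        index = min(range(len(codebook)), key=lambda i: abs(residual - codebook[i]))
        codes.append(index)
        residual -= codebook[index]
    return codes

def rvq_decode(codes, codebooks, n_stages=None):
    """Sum the selected entries of the first n_stages codebooks (all if None)."""
    used = codes[:n_stages]
    return sum(codebook[i] for codebook, i in zip(codebooks, used))

# 8 toy codebooks; each stage is 4x finer than the one before it.
codebooks = [[(-1.5 + j) * 4.0 ** -s for j in range(4)] for s in range(8)]

value = 0.7
codes = rvq_encode(value, codebooks)
errors = [abs(value - rvq_decode(codes, codebooks, n)) for n in range(1, 9)]
print(codes)
print(errors[0], errors[-1])
```

Decoding with only the first code already gives a coarse approximation, and each extra code shrinks the error, which is why a model can predict the first few codes carefully and fill in the rest more cheaply.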

Bark uses 2 decoder-only language models and 1 non-autoregressive model to train the end-to-end TTS pipeline.

The M1 model takes text and outputs the semantic tokens in an autoregressive manner.

M2 here is divided into two parts:

1. The first takes the semantic tokens and produces C1 and C2 of the target codes in an autoregressive manner.

2. The second takes C1 and C2 and outputs all 8 codes in a non-autoregressive manner.

The generated codes are fed to the audio codec (Encodec in this case) to generate the waveform.
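The two-part M2 can be sketched in terms of the shape of the code matrix it builds. Both models below are random stand-ins (hypothetical, not Bark's networks), and the one-frame-per-semantic-token mapping is a simplifying assumption; the point is only who fills in which codes, and in what order.

```python
import random

N_CODEBOOKS, N_COARSE, VOCAB = 8, 2, 1024

def coarse_model(semantic_tokens, rng):
    """Stand-in for the autoregressive part: emits C1 and C2 frame by frame."""
    frames = []
    for _ in semantic_tokens:  # toy assumption: one codec frame per semantic token
        frames.append([rng.randrange(VOCAB) for _ in range(N_COARSE)])
    return frames

def fine_model(coarse_frames, rng):
    """Stand-in for the non-autoregressive part: fills C3..C8 for all frames in one pass."""
    n_fine = N_CODEBOOKS - N_COARSE
    return [frame + [rng.randrange(VOCAB) for _ in range(n_fine)]
            for frame in coarse_frames]

rng = random.Random(0)
semantic_tokens = [17, 4, 256, 99, 3]
coarse = coarse_model(semantic_tokens, rng)
codes = fine_model(coarse, rng)

# codes now has one row per frame and 8 codes per row, ready for the codec decoder.
print(len(codes), len(codes[0]))  # 5 8
```

Keeping the autoregressive model on just two codes per frame keeps its sequence short, while the remaining six codes are predicted in parallel.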

Bark is trained on a proprietary dataset, which seems to contain a lot of languages, music and tags for sounds (softly highlights YouTube :p)

Bark is trained on multiple languages and has zero-shot voice cloning capabilities. It is also able to generate music from certain text inputs, which is mainly data dependent: when you scale the data, the semantic tokens capture the essence of different languages and music via SSL. The training recipe of the model is not public, but the open source community was able to crack the training recipe for Bark fine-tuning.

Being an autoregressive model, Bark hallucinates a lot compared to Tortoise-TTS, but it is a bit faster and easier to train than Tortoise because of its simplicity.

Now that streamable LLMs have been discovered, it will be interesting to see how they affect the quality and inference speed of Bark.

Conclusion

The M1 and M2 model approach can be seen in various other TTS papers, such as SPEAR-TTS and SoundStorm. This approach can easily scale to cross-lingual voice cloning by simply increasing the data. The same approach carries over to multi-modal models, where semantic tokens are extracted from images, audio, text and even videos! I have written a blog on the same.

We run an active community on Discord where we give our scoop on the latest in audio generative AI.

Until next time,

Jaskaran Singh

Follow me for more Deep learning coverage on LinkedIn, Twitter