[Beta] Foundational Speech Model for India

Building the Future of Multilingual AI: Foundational Speech Models for India

Hello everyone, and welcome to the most anticipated launch from Dubverse.ai, where we talk about how we built our next-generation foundational TTS model!

Let’s get started!!

AI Voice Samples

Do you recognize these personalities? (For demonstration purposes only.)

The samples above were voice-cloned with our new model, Candy.Two. Let us know in the comments if you can identify the voices.

Launching a New Suite of Speech Generation Models

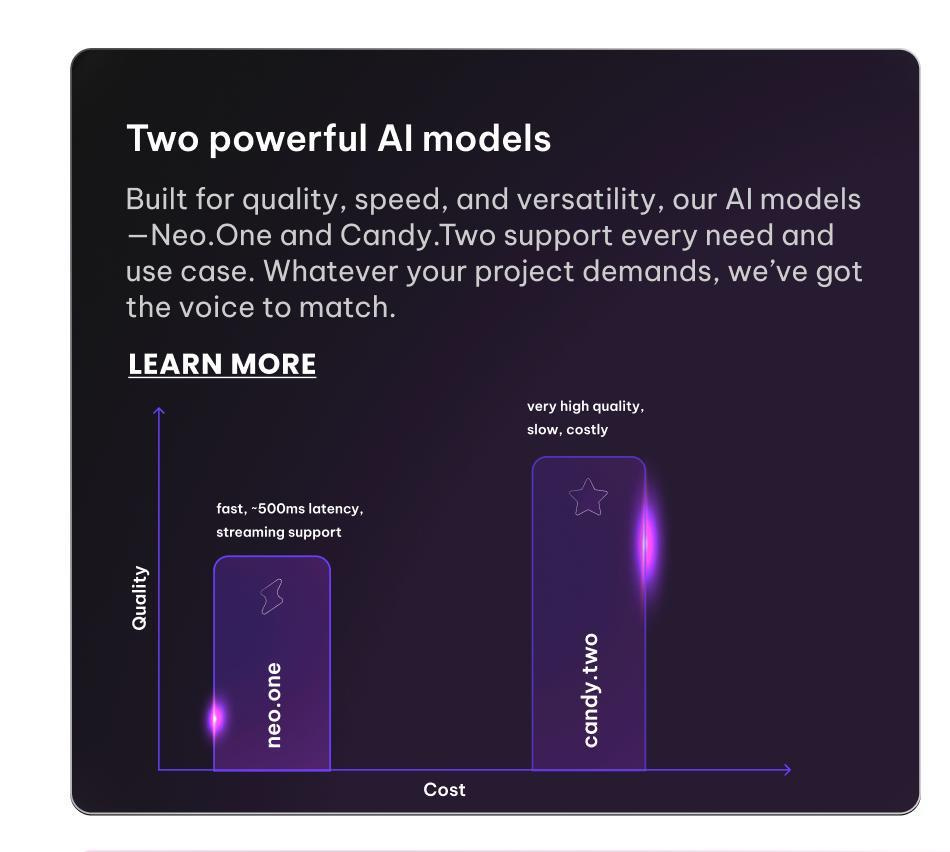

We are launching two classes of models, Neo.One and Candy.Two, which serve different purposes. If you have a voicebot-like use case where latency matters more than quality, Neo.One is the go-to solution.

If quality matters more to you than latency, Candy.Two is the better choice.

Let’s discuss these two classes of models.

Neo.One

Building on everything we learned while working on NeoDub, and trained on speaker data we collected ourselves, this model provides fast, reliable text-to-speech in 13 languages.

The model architecture and experiment details are described in this post.

Model parameters: 25M

Data size: 600 hours

Languages: 13 + 1 (accented English)

Candy.Two

Text-to-speech models are moving in the direction of scale with self-supervised training regimes (learn more here), and Candy.Two is our new generation of TTS model, trained specifically for realistic quality at the cost of a lot of compute and data. We open-sourced an early version of Candy.Two, called MahaTTS, earlier this year; Candy.Two is a substantial update to MahaTTS. This version is trained on 50k hours of data across N languages, with changes to the modelling approach for better quality and faster inference.

A quick summary of the models:

Candy.Two consists of two models: M1 (autoregressive) and M2 (diffusion/flow/GAN).

A dataset of speech audio is passed through a wav2vec2 model to get continuous embeddings. Fitting K-means clustering on these embeddings gives centroids that act as abstract phonemes; we use 10,000 centroids. This expands the phoneme dictionary from at most 200 entries in previous-generation models to 10,000, and does so without any supervision. Any audio clip can then be tokenized into these phoneme tokens.

The M1 model is trained to autoregressively predict the next phoneme token given the text and a speaker embedding. The speaker embedding is necessary because the same text can be spoken in many different ways depending on the speaker's style.
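To make the tokenization step concrete, here is a minimal NumPy sketch of fitting K-means on frame embeddings and mapping frames to their nearest centroid id. It is an illustration, not our training code: random vectors stand in for wav2vec2 features, and `k` is tiny compared with the 10,000 centroids we use (at that scale a library implementation such as scikit-learn's `MiniBatchKMeans` would be more practical). The function names are hypothetical.

```python
import numpy as np


def assign_tokens(embeddings: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    """Map each frame embedding to the id of its nearest centroid (squared L2)."""
    # ||x - c||^2 = ||x||^2 - 2 x.c + ||c||^2, computed for all frame/centroid pairs at once.
    d = (
        (embeddings ** 2).sum(axis=1, keepdims=True)
        - 2.0 * embeddings @ centroids.T
        + (centroids ** 2).sum(axis=1)
    )
    return d.argmin(axis=1)


def fit_kmeans(embeddings: np.ndarray, k: int, iters: int = 20, seed: int = 0) -> np.ndarray:
    """Plain Lloyd's k-means; returns a (k, dim) array of centroids."""
    rng = np.random.default_rng(seed)
    # Initialise centroids from k distinct, randomly chosen frames.
    centroids = embeddings[rng.choice(len(embeddings), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = assign_tokens(embeddings, centroids)
        for j in range(k):
            members = embeddings[labels == j]
            if len(members):  # keep the old centroid if a cluster empties out
                centroids[j] = members.mean(axis=0)
    return centroids


# Stand-in for wav2vec2 frame features: 2,000 frames x 64 dims (random, illustration only).
frames = np.random.default_rng(1).normal(size=(2000, 64))
centroids = fit_kmeans(frames, k=50)
tokens = assign_tokens(frames[:10], centroids)  # one discrete "phoneme" id per frame
```

Once the centroids are fixed, tokenizing new audio is just the `assign_tokens` lookup on its frame embeddings; no labels are needed at any point.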
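The autoregressive loop can be sketched as follows. `next_token_logits` is a hypothetical stand-in for M1: it sees the text ids, the speaker embedding and every token generated so far, and returns scores over the semantic-token vocabulary plus a stop token. Greedy argmax is used only for simplicity; sampling with a temperature is a common alternative.

```python
from typing import Callable, List, Sequence

import numpy as np

# Hypothetical M1 interface: (text ids, speaker embedding, tokens so far) -> logits.
NextTokenFn = Callable[[Sequence[int], np.ndarray, Sequence[int]], np.ndarray]


def generate_semantic_tokens(
    next_token_logits: NextTokenFn,
    text_ids: Sequence[int],
    speaker_emb: np.ndarray,
    stop_id: int,
    max_len: int = 1000,
) -> List[int]:
    """Greedy autoregressive decoding: each new token is conditioned on all previous ones."""
    out: List[int] = []
    for _ in range(max_len):
        logits = next_token_logits(text_ids, speaker_emb, out)
        tok = int(np.argmax(logits))
        if tok == stop_id:
            break
        out.append(tok)
    return out


STOP = 10


def toy_model(text_ids, speaker_emb, prev):
    """Toy stand-in: emits len(prev) + 3 until three tokens exist, then the stop token."""
    logits = np.zeros(STOP + 1)
    logits[STOP if len(prev) >= 3 else len(prev) + 3] = 1.0
    return logits


print(generate_semantic_tokens(toy_model, [1, 2], np.zeros(4), stop_id=STOP))  # → [3, 4, 5]
```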

The M2 model takes these speech tokens along with the speaker embedding and generates the mel spectrogram of the given phonemes in the given speaker's voice. For better generation quality, we currently use a diffusion model.

M2 acts like an RVC (Retrieval-based Voice Conversion) model and works as a zero-shot voice cloning model; it can even generate singing voices that were not seen during training.

The mel spectrogram is passed through BigVGAN to generate the audio waveform.

Model size: 1 billion parameters across M1 and M2
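The full chain can be summarised as a small sketch of how the three stages hand data to each other. Every stage here is a hypothetical stand-in callable, and the hop size (256 samples per mel frame) and 80 mel bins are assumptions chosen only to make the shapes concrete; they are not taken from our models.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

import numpy as np


@dataclass
class TwoStageTTS:
    """Hypothetical wiring of the M1 -> M2 -> vocoder chain; every stage is a stand-in callable."""
    m1: Callable[[Sequence[int], np.ndarray], List[int]]  # text ids + speaker emb -> semantic tokens
    m2: Callable[[List[int], np.ndarray], np.ndarray]     # tokens + speaker emb -> mel (n_mels, frames)
    vocoder: Callable[[np.ndarray], np.ndarray]           # mel -> 1-D waveform (BigVGAN's role)

    def synthesize(self, text_ids: Sequence[int], speaker_emb: np.ndarray) -> np.ndarray:
        tokens = self.m1(text_ids, speaker_emb)  # what is said (semantics)
        mel = self.m2(tokens, speaker_emb)       # how it sounds, in the target voice (acoustics)
        return self.vocoder(mel)


HOP = 256    # assumed waveform samples per mel frame
N_MELS = 80  # assumed number of mel bins

# Toy stand-ins so the wiring runs end to end (shapes only, no real models).
tts = TwoStageTTS(
    m1=lambda text, spk: [t % 10 for t in text for _ in range(2)],  # 2 tokens per text id
    m2=lambda toks, spk: np.zeros((N_MELS, 2 * len(toks))),         # 2 mel frames per token
    vocoder=lambda mel: np.zeros(mel.shape[1] * HOP),
)
wav = tts.synthesize([1, 2, 3], np.zeros(16))  # 3 ids -> 6 tokens -> 12 frames -> 3072 samples
```

The speaker embedding is passed to both M1 and M2, matching the description above: M1 uses it for speaking style, M2 for voice identity.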

Data size: 50k hours

Samples:

Research and Engineering Alpha

You can divide speech into two components:

Semantics → concerned with the linguistic content (the message) of the speech.

Acoustics → concerned with acoustic properties such as voice identity and colour.

The choice of semantic tokens is crucial for a good system; different TTS systems have experimented with Wav2Vec2 and VQ-VAE tokens.

We went with Wav2Vec2 because it produces far fewer pronunciation errors than VQ-VAE tokens, which also require joint training. A pretrained Wav2Vec2 model works as well.

For the acoustic model, our first choice was diffusion, because it is easy to train and produces better quality than GAN outputs.

However, diffusion models require a lot of compute to train and have noticeable latency because of the many denoising steps they take to generate the output.

This led us to Continuous Normalizing Flows (CNFs), which offer faster training and inference and are more compute-efficient. CNFs generalize diffusion models: with optimal-transport conditional flow matching (OT-CFM), samples follow a straight path in continuous time rather than the curved trajectory of discrete DDPMs.

In the beginning we took heavy inspiration from Tortoise-TTS, and found that newer architectures like Gemma or Llama are far more efficient than GPT-2, mainly because of their positional embeddings.

GPT-2 uses learned positional embeddings and takes a lot of VRAM. Gemma uses Rotary Position Embedding (RoPE), and when training it we applied RoPE extension to increase the model's context window.

RoPE extension worked well for text input, as expected. For semantic tokens, however, instead of extending the usable context window, the model changed the rate of speech tokens, which resulted in slower or faster speech. Understanding positional embeddings for audio tokens could be an interesting area of research.

Adding a new language to the system requires at least 100 hours of data. The data should be well balanced in terms of speaker diversity (at least 50 speakers) and clip length (roughly following a Gaussian distribution). This matters mostly for the M1 model; if your dataset is fairly imbalanced, you can work around it by sampling training batches according to a target distribution.
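Returning to the acoustic model, the straight-path idea behind OT-CFM can be sketched in a few lines. This follows the standard OT conditional path from the flow-matching literature, x_t = (1 − (1 − σ_min)t)·x0 + t·x1 with target velocity u_t = x1 − (1 − σ_min)·x0; it is not our exact training code, and `model` is a hypothetical velocity network. Because each conditional path is a straight line, even a single Euler step recovers the data point for the idealised field; a learned field is only approximately straight, but it usually needs far fewer steps than DDPM sampling.

```python
import numpy as np


def ot_cfm_sample(x0, x1, t, sigma_min=1e-4):
    """OT-CFM training pair: point x_t on the path from noise x0 to data x1 at time t,
    and the constant target velocity u_t the network should regress."""
    t = np.asarray(t, dtype=float).reshape(-1, *([1] * (x1.ndim - 1)))  # broadcast over features
    xt = (1.0 - (1.0 - sigma_min) * t) * x0 + t * x1
    ut = x1 - (1.0 - sigma_min) * x0
    return xt, ut


def cfm_loss(model, x1, cond, rng, sigma_min=1e-4):
    """Flow-matching loss for a batch of data x1 (e.g. mel frames); `model` is hypothetical."""
    x0 = rng.standard_normal(x1.shape)     # Gaussian noise
    t = rng.uniform(size=x1.shape[0])      # one random time per example
    xt, ut = ot_cfm_sample(x0, x1, t, sigma_min)
    pred = model(xt, t, cond)              # predicted velocity at (x_t, t)
    return float(np.mean((pred - ut) ** 2))


def euler_sample(velocity, x0, n_steps):
    """Integrate dx/dt = velocity(x, t) from t=0 (noise) to t=1 (data) with fixed Euler steps."""
    x = x0.copy()
    dt = 1.0 / n_steps
    for i in range(n_steps):
        x = x + dt * velocity(x, i * dt)
    return x
```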
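For readers unfamiliar with RoPE, here is a minimal NumPy sketch. The post does not say which RoPE-extension method was used; the `scale` parameter below implements linear position interpolation (dividing positions by a factor), which is one common extension technique and is shown only as an assumption. The key property is that the attention score between a query at position m and a key at position n depends only on n − m.

```python
import numpy as np


def rope(x: np.ndarray, positions: np.ndarray, base: float = 10000.0, scale: float = 1.0) -> np.ndarray:
    """Rotary position embedding for float x of shape (seq, dim), with dim even.

    scale > 1 applies linear position interpolation (positions / scale), one common way
    of fitting longer sequences into the position range seen during training.
    """
    seq, dim = x.shape
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)             # (dim/2,) rotation frequencies
    angles = (positions[:, None] / scale) * inv_freq[None, :]    # (seq, dim/2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x_even * cos - x_odd * sin  # rotate each (even, odd) feature pair
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out


rng = np.random.default_rng(0)
q, k = rng.normal(size=(1, 8)), rng.normal(size=(1, 8))


def score(m, n, scale=1.0):
    """Attention logit between the query at position m and the key at position n."""
    return float(np.sum(rope(q, np.array([m]), scale=scale) * rope(k, np.array([n]), scale=scale)))
```

With `scale=2.0`, positions 10 and 4 receive exactly the rotations that positions 5 and 2 received with `scale=1.0`, so interpolation changes the apparent spacing between tokens rather than adding new position information.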
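One way to "load a suggested distribution" into training batches is importance-style resampling: weight each clip by how much more likely its duration is under the target Gaussian than in the actual data. The sketch below handles clip length only (speaker balance could be handled the same way), and the function name, target mean and standard deviation are hypothetical values for illustration.

```python
import numpy as np


def balanced_sampling_weights(durations, target_mean, target_std, n_bins=20):
    """Per-clip sampling probabilities that reshape the clip-length distribution
    toward a Gaussian N(target_mean, target_std^2).

    weight_i ∝ target_density(d_i) / empirical_share(histogram bin of d_i)
    """
    durations = np.asarray(durations, dtype=float)
    counts, edges = np.histogram(durations, bins=n_bins)
    # Bin index consistent with np.histogram: interior edges split the range into n_bins bins.
    bin_idx = np.clip(np.digitize(durations, edges[1:-1]), 0, n_bins - 1)
    empirical = counts[bin_idx] / len(durations)  # share of clips in each clip's bin
    target = np.exp(-0.5 * ((durations - target_mean) / target_std) ** 2)  # unnormalised Gaussian
    w = target / empirical
    return w / w.sum()


rng = np.random.default_rng(0)
# Skewed toy dataset: mostly short clips, few long ones (durations in seconds).
durations = np.concatenate([rng.uniform(1, 3, 900), rng.uniform(3, 15, 100)])
p = balanced_sampling_weights(durations, target_mean=8.0, target_std=3.0)
batch_idx = rng.choice(len(durations), size=32, p=p)  # clip indices for one training batch
```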

The semantic tokens carry more information than just linguistics; they also capture the tonality and emotion of the speech.

The model fails to converge when trained on less than roughly 10,000 hours of data, mostly because of the large semantic-token vocabulary. One could try limiting the number of centroids in order to train on fewer hours of data.
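A back-of-the-envelope calculation shows why fewer centroids can help on smaller datasets. It assumes one token per wav2vec2 frame (a 20 ms hop, i.e. 50 frames per second), no deduplication of repeated tokens, and perfectly even cluster usage; real cluster usage is skewed, so treat these as rough averages only.

```python
def tokens_per_centroid(hours: float, n_centroids: int, frame_rate_hz: float = 50.0) -> float:
    """Average number of training frames per semantic-token id (assumes even cluster usage)."""
    return hours * 3600 * frame_rate_hz / n_centroids


print(tokens_per_centroid(10_000, 10_000))  # → 180000.0
print(tokens_per_centroid(100, 10_000))     # → 1800.0
print(tokens_per_centroid(100, 500))        # → 36000.0
```

Under these assumptions, 100 hours spread over 10,000 centroids leaves 100 times fewer examples per token than 10,000 hours does, while shrinking the vocabulary to 500 centroids recovers a fifth of that density.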

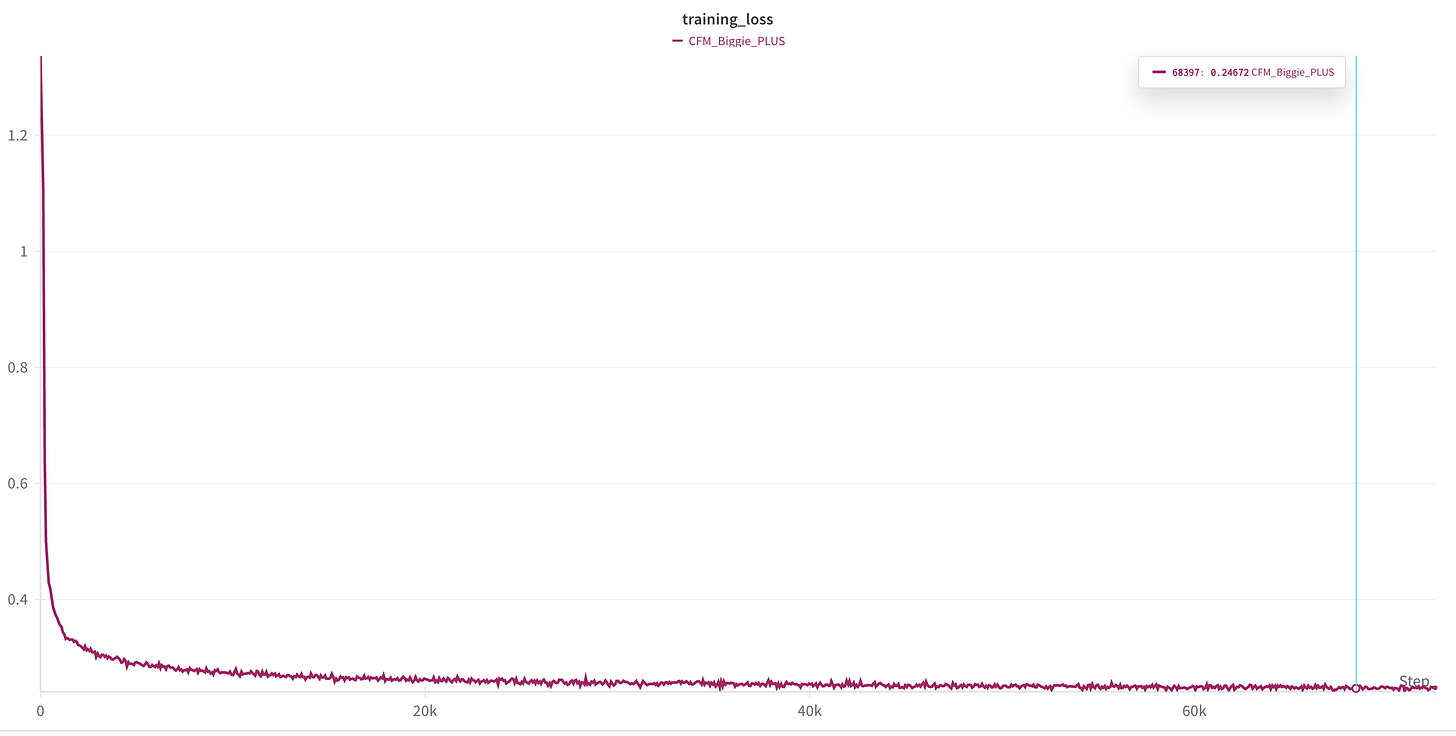

Below are the training loss graphs:

CFM model trained on 50k hours:

M1 model with different model sizes:

Future of Foundational Speech Models

GPT-4o and AudioPaLM have set a precedent for what future speech agents will look like when an M1-style model is an LLM trained on the entirety of the internet. Foundational speech models are moving in the same direction. Audio tokenization has enabled LLMs to become more interactive. The old approach of cascading models (speech-to-text, text-to-text, and text-to-speech) is shifting toward a single M1 (LLM) model for all tasks, with a different tokenizer for each modality. LMMs (Large Multimodal Models) represent the next step toward fully functional speech agents, and they will also pave the way for effective dubbing and speech translation.
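To illustrate "a single model with a different tokenizer for each modality", here is a minimal sketch of one common approach: give each tokenizer its own contiguous block of ids in a shared vocabulary so that one LLM can read and emit both. The vocabulary sizes are hypothetical (the 10,000 audio entries simply mirror our centroid count), and this is not a description of how GPT-4o or AudioPaLM are implemented internally.

```python
from typing import Dict, List, Tuple

# Hypothetical per-modality vocabulary sizes.
MODALITY_VOCAB: Dict[str, int] = {"text": 32000, "audio": 10000}


def build_offsets(vocab_sizes: Dict[str, int]) -> Dict[str, int]:
    """Give each modality a contiguous, non-overlapping block of ids in one shared vocabulary."""
    offsets, start = {}, 0
    for name, size in vocab_sizes.items():
        offsets[name] = start
        start += size
    return offsets


OFFSETS = build_offsets(MODALITY_VOCAB)


def to_shared(modality: str, ids: List[int]) -> List[int]:
    """Shift a tokenizer's local ids into the shared id space."""
    size = MODALITY_VOCAB[modality]
    if any(not 0 <= i < size for i in ids):
        raise ValueError(f"id outside the {modality} tokenizer's vocabulary")
    return [OFFSETS[modality] + i for i in ids]


def from_shared(shared_id: int) -> Tuple[str, int]:
    """Recover (modality, local id) from a shared id."""
    for name, size in MODALITY_VOCAB.items():
        off = OFFSETS[name]
        if off <= shared_id < off + size:
            return name, shared_id - off
    raise ValueError(f"id {shared_id} is outside the shared vocabulary")


sequence = to_shared("text", [5, 17]) + to_shared("audio", [42, 9999])  # → [5, 17, 32042, 41999]
```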

The value of data is at an all-time high and will continue to increase. In India, where language and culture change every 50-100 kilometers, this will be a fundamental challenge to address.

Check out our open-source efforts in this direction at MahaTTS.

Until next time,

Jaskaran Singh

Abhinay

Follow me on LinkedIn and Twitter for more deep learning content.